Insight

Spatial sound is best understood through embodied interaction. When users can map physical gestures directly to spatial changes, they begin to reason about a sound as an object with a position in space rather than as an abstract parameter. A minimal but expressive control set does more to foster curiosity and learning than a dense interface.

Solution

Tangible Interaction Model

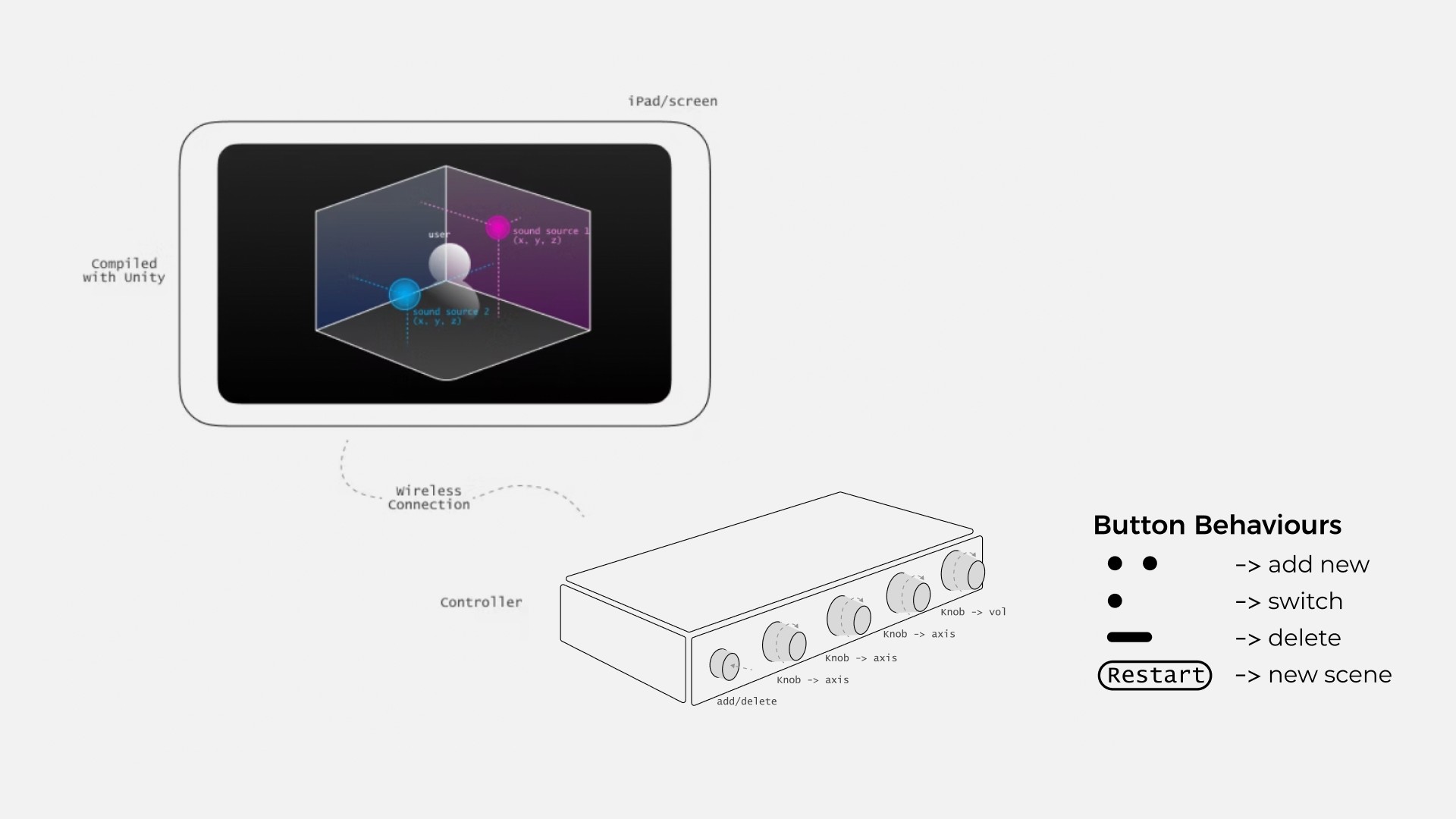

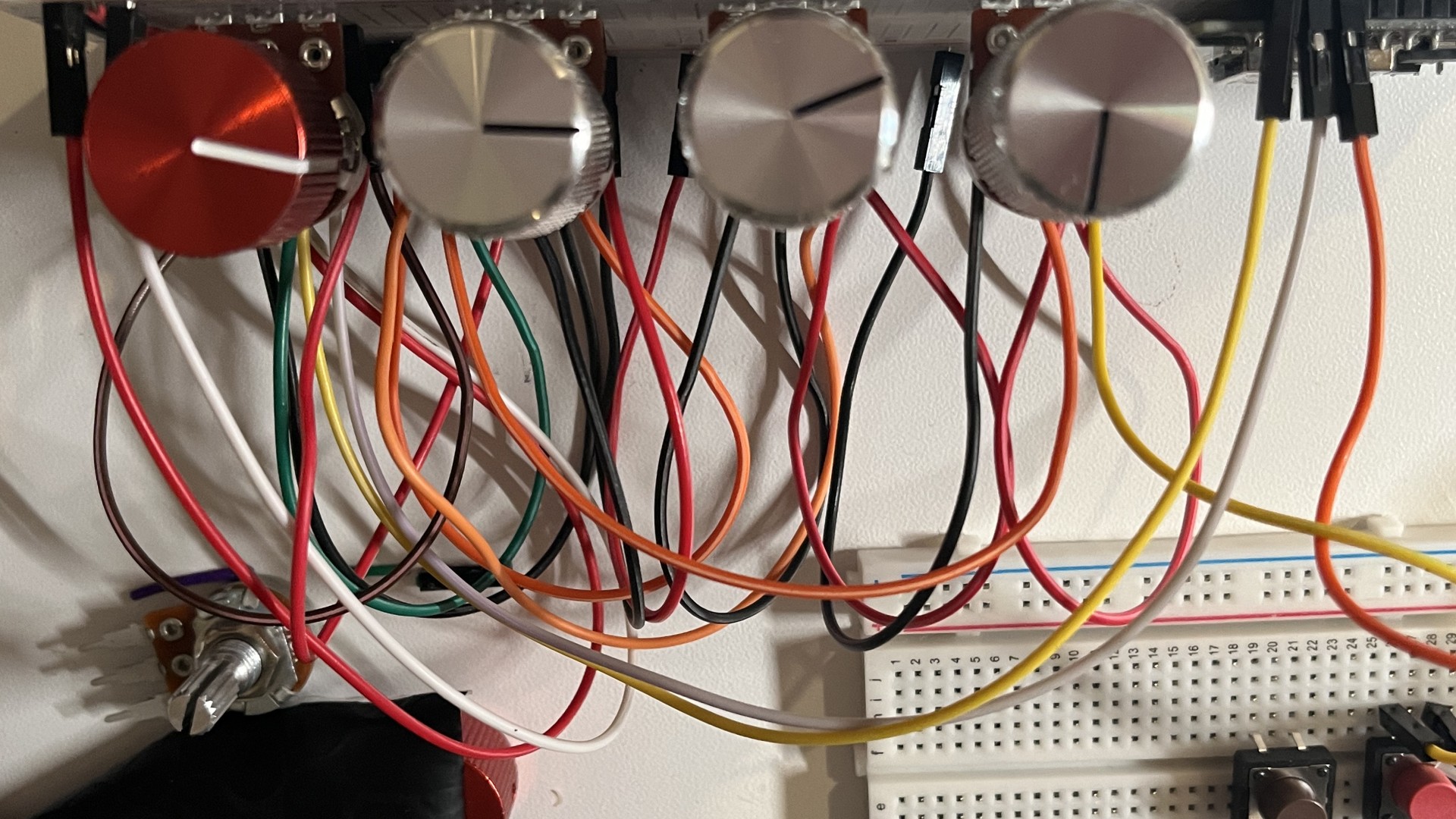

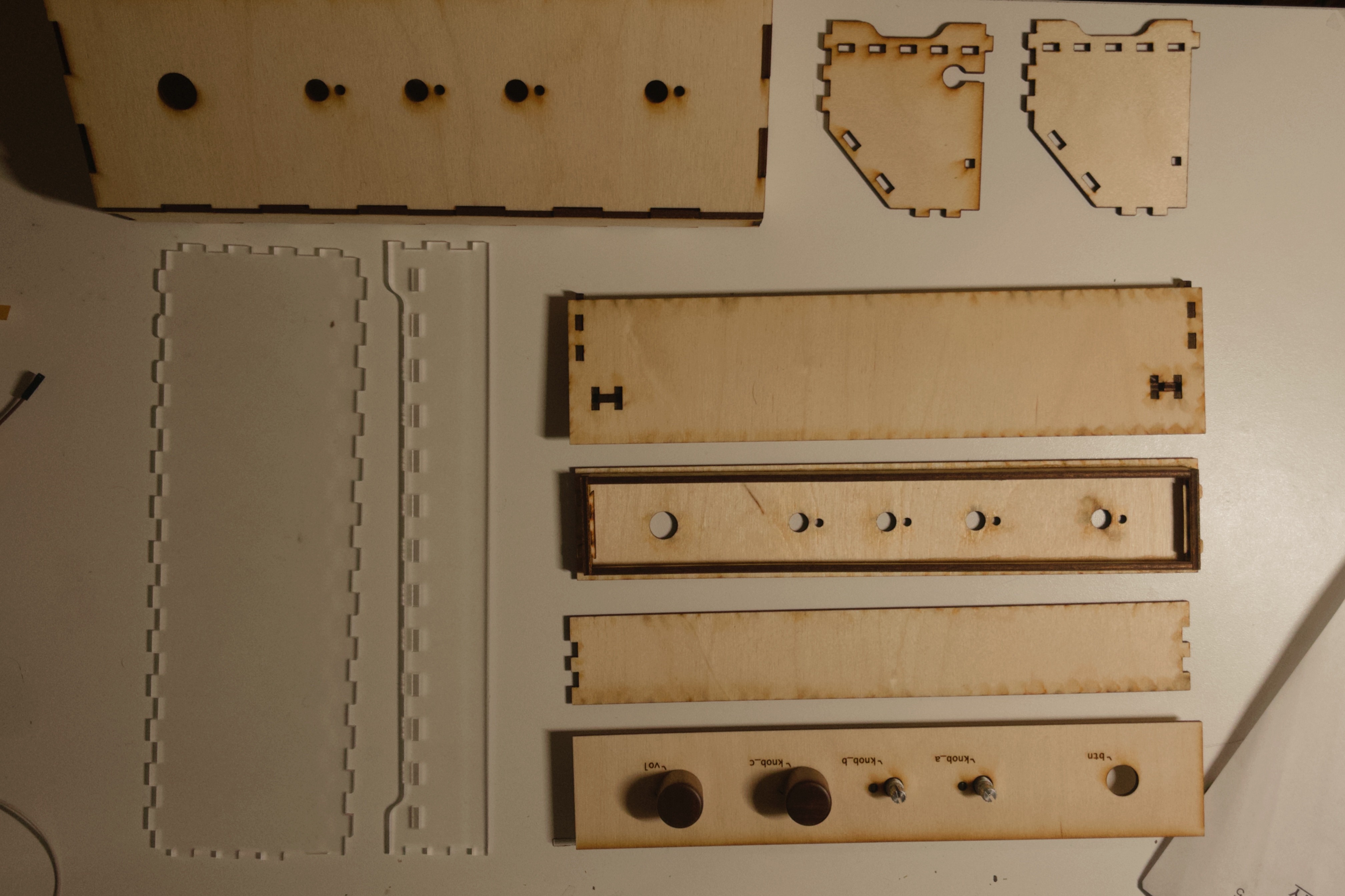

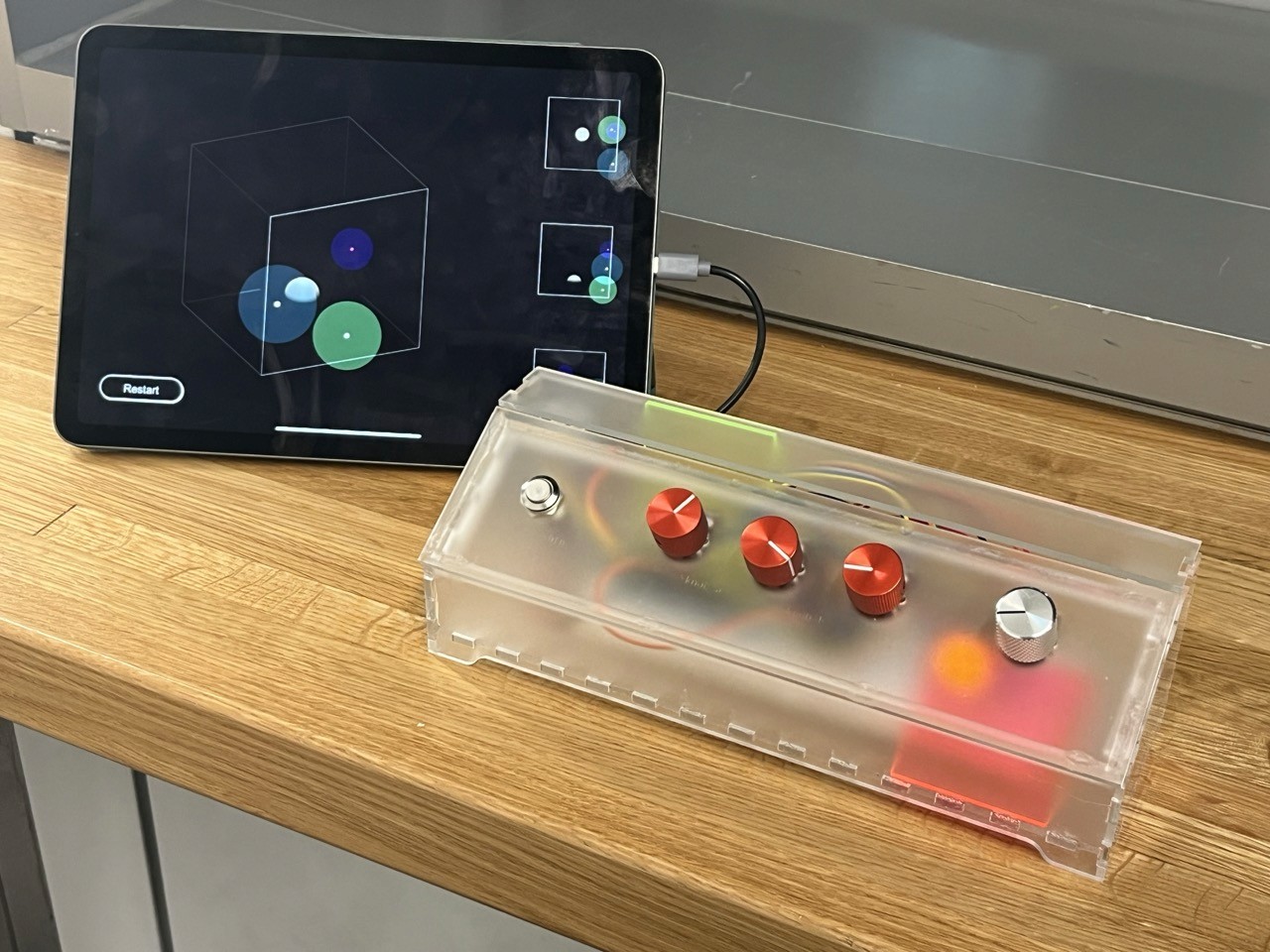

Sound Bubbles uses four precision knobs and a multifunctional push button as its primary interface. Three knobs map directly to the X, Y, and Z coordinates of a sound source, allowing users to “place” sound in space through continuous rotation. A fourth knob controls volume, keeping loudness control separate from spatial control.
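The knob mapping can be illustrated with a minimal sketch. It is written in Python for readability and is not the project's Unity code. The 10-bit reading range (`ADC_MAX`), the room size (`half_extent`), and the names `SourceState`, `scale`, and `knobs_to_state` are all assumptions made for illustration.

```python
from dataclasses import dataclass

ADC_MAX = 1023  # assumption: knob readings arrive as 10-bit values (0..1023)


@dataclass
class SourceState:
    """Position and loudness of one sound source (hypothetical structure)."""
    x: float
    y: float
    z: float
    volume: float


def scale(raw: int, lo: float, hi: float) -> float:
    """Linearly map a raw reading in [0, ADC_MAX] to [lo, hi], clamping bad input."""
    clamped = max(0, min(ADC_MAX, raw))
    return lo + (hi - lo) * clamped / ADC_MAX


def knobs_to_state(raw_x: int, raw_y: int, raw_z: int, raw_vol: int,
                   half_extent: float = 5.0) -> SourceState:
    """Three knobs set the position inside a cube; the fourth sets volume only.

    half_extent is an assumed room size: each axis spans [-half_extent, +half_extent].
    """
    return SourceState(
        x=scale(raw_x, -half_extent, half_extent),
        y=scale(raw_y, -half_extent, half_extent),
        z=scale(raw_z, -half_extent, half_extent),
        volume=scale(raw_vol, 0.0, 1.0),
    )
```

Keeping volume in its own field, never derived from position, mirrors the separation between spatial and loudness control described above.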

The push button manages sound sources through temporal gestures: a single press switches between sources, a double press adds a new source, and a long press removes the current one. This layered interaction model enables complex control without increasing hardware complexity.
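One way to tell the three gestures apart is a small timing-based detector, sketched below in Python. The thresholds `LONG_PRESS_S` and `DOUBLE_GAP_S` and the class `GestureDetector` are hypothetical; the actual timings and implementation used in Sound Bubbles are not stated here.

```python
from typing import Optional

LONG_PRESS_S = 0.8  # assumption: holding at least this long counts as a long press
DOUBLE_GAP_S = 0.3  # assumption: max gap between first release and second press


class GestureDetector:
    """Classifies button events into "single", "double", or "long" (hypothetical)."""

    def __init__(self) -> None:
        self._pressed_at: Optional[float] = None
        self._pending_tap_at: Optional[float] = None  # release time of an unconfirmed tap
        self._is_second = False

    def press(self, t: float) -> None:
        self._pressed_at = t
        # A press that follows a recent tap closely is the second half of a double press.
        self._is_second = (self._pending_tap_at is not None
                           and t - self._pending_tap_at <= DOUBLE_GAP_S)

    def release(self, t: float) -> Optional[str]:
        if self._pressed_at is None:
            return None
        held = t - self._pressed_at
        self._pressed_at = None
        if held >= LONG_PRESS_S:
            # A long press discards any tap that was still waiting for confirmation.
            self._pending_tap_at = None
            self._is_second = False
            return "long"
        if self._is_second:
            self._pending_tap_at = None
            self._is_second = False
            return "double"
        # Short first tap: wait to see whether a second press follows.
        self._pending_tap_at = t
        return None

    def tick(self, t: float) -> Optional[str]:
        """Call every loop iteration; confirms a single press once the window expires."""
        if (self._pending_tap_at is not None and self._pressed_at is None
                and t - self._pending_tap_at > DOUBLE_GAP_S):
            self._pending_tap_at = None
            return "single"
        return None
```

The trade-off in this design is that a single press is only reported after the double-press window has passed, so switching sources feels slightly delayed compared with adding or removing one.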

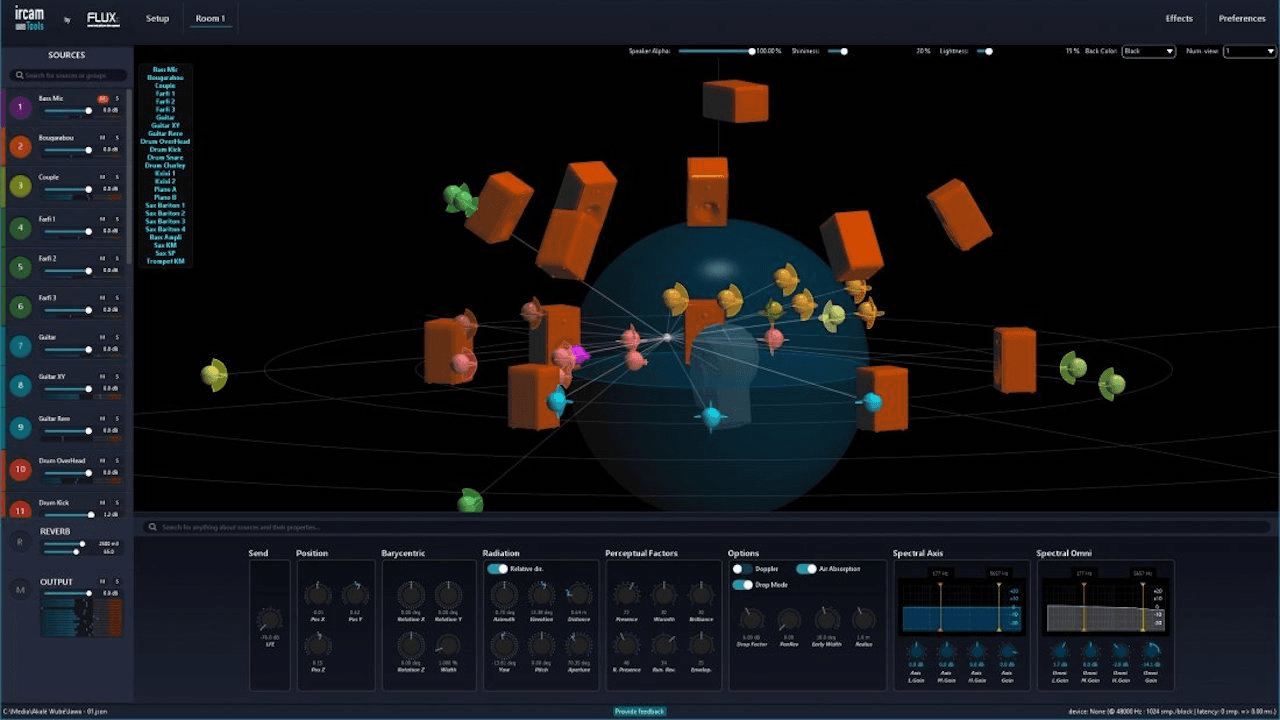

Spatial Audio and Visual Feedback

User actions are reflected simultaneously in sound and visuals. As knobs are adjusted, changes are visualized on an iPad and within a Unity-based 3D environment, reinforcing the relationship between physical input, spatial position, and perceived audio movement.

A brief onboarding guide introduces basic interactions while leaving room for open-ended exploration.

System Implementation

The physical controller reads analog values from potentiometers via an ESP8266 microcontroller, which acts as a WiFi access point. Control data is transmitted wirelessly to Unity, where it is parsed and mapped to real-time spatial audio rendering and visualization.
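The parsing step can be sketched as follows. The wire format is not specified above, so this example assumes a hypothetical comma-separated text frame, `x,y,z,volume,button`, with 10-bit knob readings and a 0/1 button flag. It is written in Python to show the validation logic and is not the Unity-side code.

```python
from typing import NamedTuple, Optional

ADC_MAX = 1023  # assumption: knob readings are 10-bit values


class ControlFrame(NamedTuple):
    """One decoded controller update (hypothetical structure)."""
    x: int
    y: int
    z: int
    volume: int
    button_down: bool


def parse_frame(line: str) -> Optional[ControlFrame]:
    """Parse 'x,y,z,volume,button' and return None for any malformed frame."""
    parts = line.strip().split(",")
    if len(parts) != 5:
        return None
    try:
        x, y, z, vol, btn = (int(p) for p in parts)
    except ValueError:
        return None  # non-numeric field
    if not all(0 <= v <= ADC_MAX for v in (x, y, z, vol)):
        return None  # reading outside the assumed ADC range
    if btn not in (0, 1):
        return None
    return ControlFrame(x, y, z, vol, bool(btn))
```

Dropping malformed frames rather than raising keeps the render loop running when a wireless packet arrives truncated or corrupted; the receiver simply keeps the last valid state until the next good frame.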

This modular architecture allows the system to scale from simple prototypes to more complex spatial scenes, and leaves room for future versions that run on embedded audio platforms without Unity.