Insight

Early explorations focused on existing spatial audio toolchains, including the ICST Ambisonics Tools for Max/MSP, which offer powerful higher-order Ambisonics processing and precise 3D sound field control.

While these tools perform well in controlled or stationary listening scenarios, they proved difficult to integrate into environments with mobile listeners. Maintaining accurate spatial perception required repositioning the entire sound field relative to the listener, introducing significant computational overhead and latency during movement.

This exploration revealed a fundamental limitation: many spatial audio systems prioritize sound field representation over listener mobility. Accurate spatial rendering breaks down not because of the audio algorithms themselves, but because the listener's position cannot be updated reliably and continuously as they move through real space.

From this, it became clear that spatial audio quality depends as much on tracking infrastructure as on rendering techniques. A system built around precise, low-latency positional tracking, rather than around camera-based tracking or the assumption of a stationary listener, was needed to support large, walkable environments.
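The need for low latency can be made concrete with a back-of-the-envelope estimate: the position the renderer uses lags the listener's true position by roughly their speed times the end-to-end latency. The numbers below are illustrative only (a typical walking speed of about 1.4 m/s and a hypothetical 100 ms latency), not measurements from this project:

$$
\Delta x \approx v\,\tau = 1.4\ \text{m/s} \times 0.1\ \text{s} = 0.14\ \text{m}
$$

The error grows in proportion to both speed and latency, so a sound anchored to a fixed point in the room appears to drift as the listener walks past it.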

Solution

Position Tracking

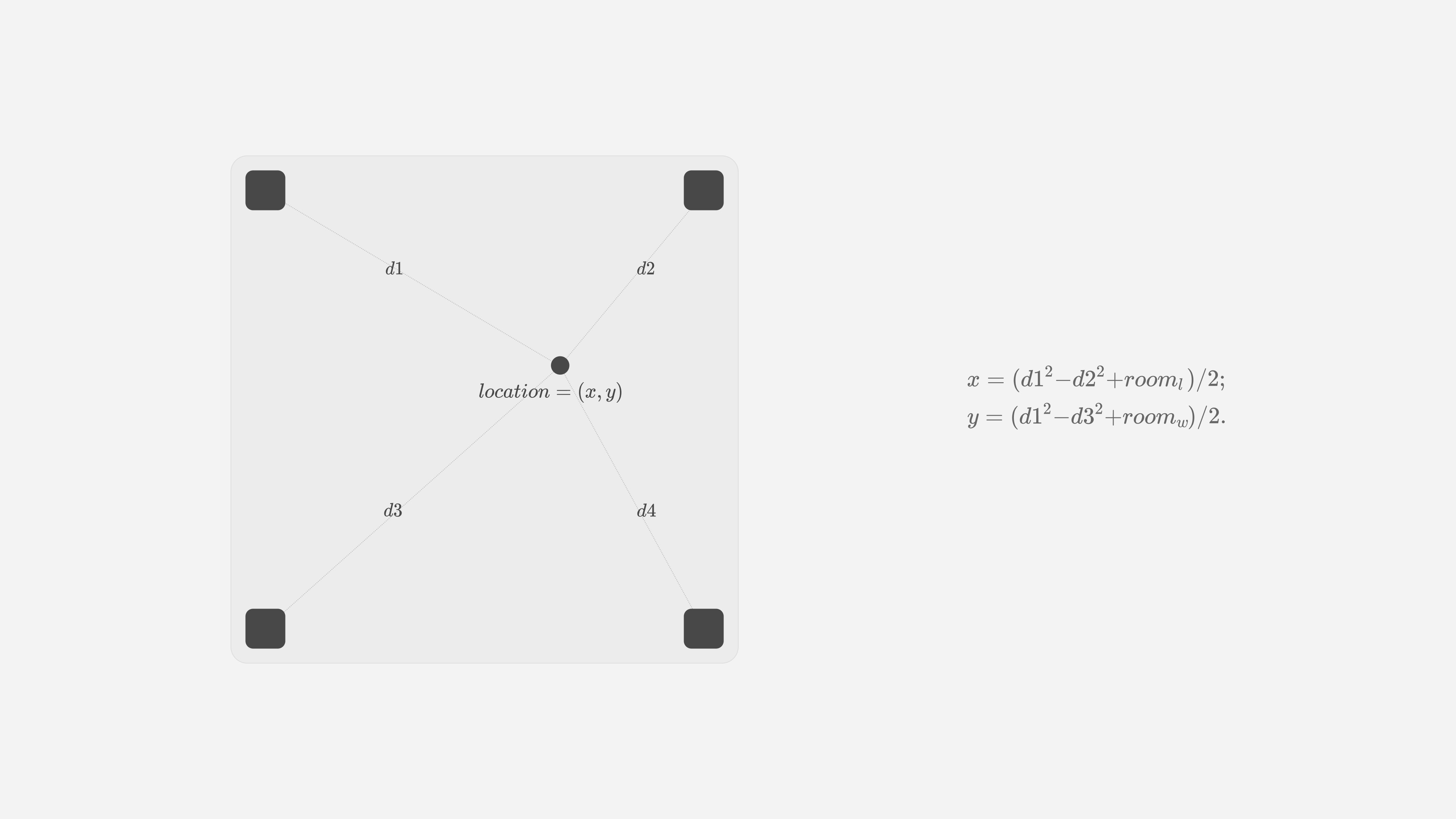

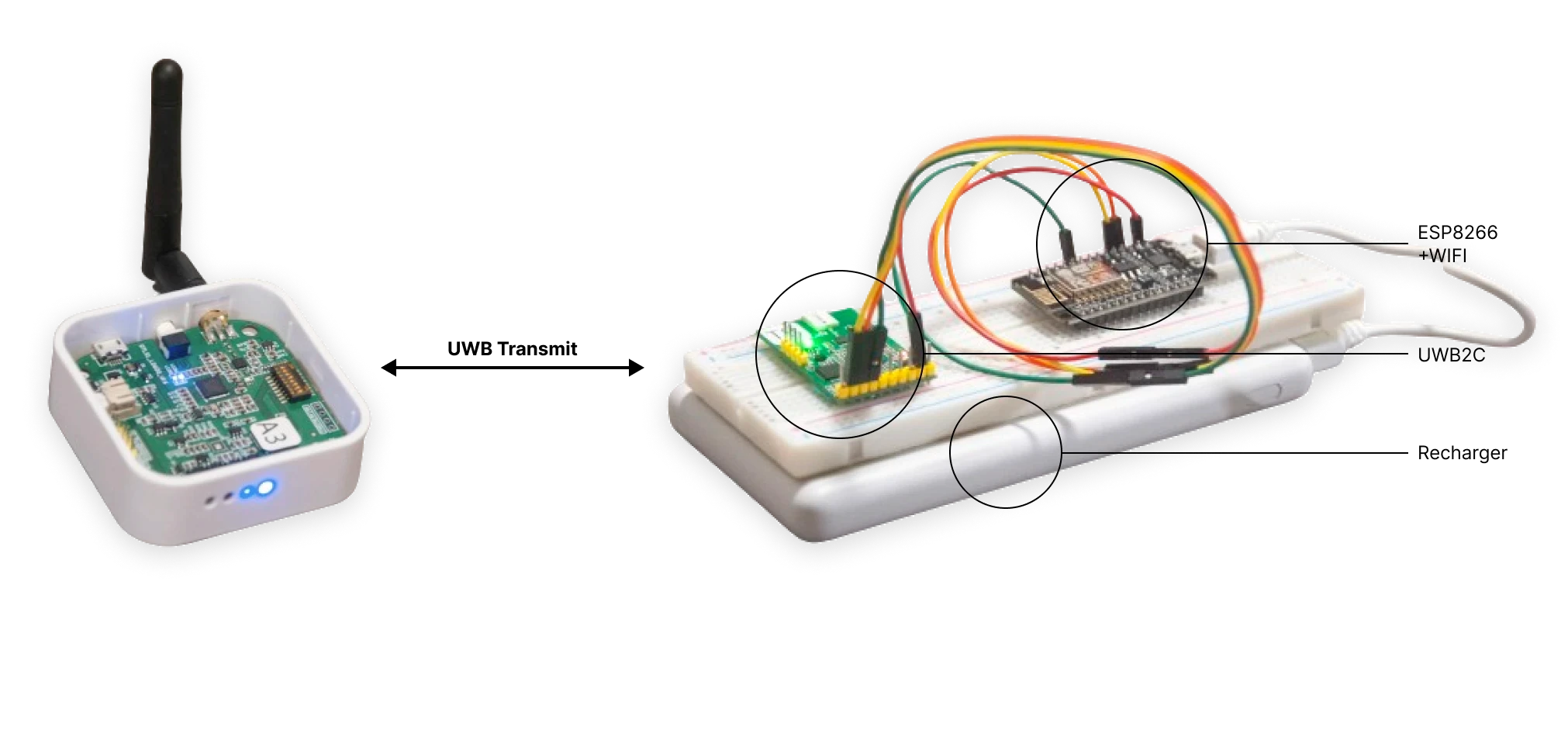

The system uses UWB (ultra-wideband) anchors placed at fixed, known positions around the space and wearable UWB tags controlled by microcontrollers. The time of flight of signals exchanged between each tag and the anchors gives the tag-to-anchor distances, from which trilateration continuously estimates the listener's position with high precision across spaces up to tens of meters.
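The ranging and positioning steps can be sketched in Python. This is a minimal sketch under stated assumptions, not the project's firmware: it assumes single-sided two-way ranging (the tag measures a round-trip time and the anchor's reply delay is known), whereas practical UWB stacks often use double-sided ranging to cancel clock drift, and it solves trilateration by linear least squares. The names `twr_distance`, `trilaterate`, and `SPEED_OF_LIGHT` are hypothetical. A 2D fix needs at least three non-collinear anchors; a 3D fix needs at least four non-coplanar ones.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def twr_distance(t_round: float, t_reply: float) -> float:
    """Single-sided two-way ranging (assumed scheme).

    The tag measures the round-trip time t_round; the anchor's reply delay
    t_reply is known. The one-way time of flight is half of what remains.
    Times are in seconds; the result is in metres.
    """
    tof = (t_round - t_reply) / 2.0
    return SPEED_OF_LIGHT * tof


def trilaterate(anchors: np.ndarray, distances: np.ndarray) -> np.ndarray:
    """Least-squares tag position from anchor coordinates (N x D) and ranges (N,).

    Each range defines a circle/sphere |p - a_i| = d_i. Subtracting the first
    anchor's equation from the rest cancels the |p|^2 term, leaving the
    linear system 2 (a_i - a_0) . p = |a_i|^2 - |a_0|^2 - d_i^2 + d_0^2.
    """
    anchors = np.asarray(anchors, dtype=float)
    distances = np.asarray(distances, dtype=float)
    a0, d0 = anchors[0], distances[0]
    A = 2.0 * (anchors[1:] - a0)
    b = (np.sum(anchors[1:] ** 2, axis=1) - np.sum(a0 ** 2)
         - distances[1:] ** 2 + d0 ** 2)
    position, *_ = np.linalg.lstsq(A, b, rcond=None)
    return position
```

For example, with anchors at the corners of a hypothetical 10 m by 8 m room and exact ranges to a tag at (3, 4), `trilaterate` recovers (3, 4). With noisy ranges, anchors beyond the minimum give the least-squares solve redundant equations, which helps average out part of the measurement error.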

Data Transmission

Listener position data is transmitted wirelessly to Unity over a lightweight UDP-based protocol. Packets are kept small and update intervals are tightly controlled to maintain low latency and stable real-time performance while the listener moves.
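A compact packet for this link might look like the following Python sketch. The field layout (`<BIfff`: tag ID, sequence number, and x/y/z in metres, 17 bytes in total), the function names, and the stale-packet rule are all assumptions for illustration; the text does not describe the actual packet format. Because UDP does not guarantee ordering, a sequence number lets the receiver discard packets that arrive late instead of snapping the listener back to an older position.

```python
import struct

# Hypothetical packet layout: little-endian, no padding.
# uint8 tag ID, uint32 sequence number, float32 x, y, z in metres.
PACKET_FORMAT = "<BIfff"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 1 + 4 + 3 * 4 = 17 bytes


def pack_position(tag_id: int, seq: int, x: float, y: float, z: float) -> bytes:
    """Serialize one position sample; seq wraps around at 2**32."""
    return struct.pack(PACKET_FORMAT, tag_id, seq & 0xFFFFFFFF, x, y, z)


def unpack_position(data: bytes) -> tuple:
    """Parse a packet back into (tag_id, seq, x, y, z); rejects wrong sizes."""
    if len(data) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(data)}")
    return struct.unpack(PACKET_FORMAT, data)


def is_newer(seq: int, last_seq: int) -> bool:
    """True if seq is ahead of last_seq, allowing for 32-bit wraparound.

    UDP may reorder datagrams, so the receiver drops anything that is not
    newer than the last sample it applied.
    """
    diff = (seq - last_seq) & 0xFFFFFFFF
    return 0 < diff < 0x80000000
```

On the sending side, each payload would go out with `sock.sendto(payload, (host, port))` on a `socket.SOCK_DGRAM` socket; the receiver would parse the same layout and apply only packets for which `is_newer` holds.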

Spatial Audio Rendering

Unity and Steam Audio render object-based spatial sound with a dynamically updated listener position. As the listener moves, spatial parameters such as direction, distance, and room effects are recalculated in real time, and the result is rendered binaurally using HRTFs.
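Steam Audio performs this geometry internally; the Python sketch below only illustrates what has to be recomputed whenever a new listener position arrives. It assumes a 2D floor plane, a listener heading supplied by some orientation source (the text does not say where heading comes from), and a simple inverse-distance gain clamped at a minimum distance, which is a stand-in rather than Steam Audio's own attenuation model. The resulting azimuth is the kind of value an HRTF-based renderer uses to select filters; the HRTF lookup itself is not shown. The function name is hypothetical.

```python
import math


def source_relative_to_listener(
    listener_xy: tuple[float, float],
    source_xy: tuple[float, float],
    listener_yaw_rad: float = 0.0,
    min_distance: float = 1.0,
) -> tuple[float, float, float]:
    """Return (distance_m, azimuth_rad, gain) for one source on the floor plane.

    Assumed convention: +y is "forward" when yaw is 0, +x is to the right,
    and yaw and azimuth increase clockwise. Azimuth 0 means straight ahead,
    +pi/2 means to the listener's right; the result is wrapped to [-pi, pi).
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    distance = math.hypot(dx, dy)
    # Bearing of the source in world space, measured clockwise from +y.
    world_bearing = math.atan2(dx, dy)
    # Rotate into the listener's frame and wrap into [-pi, pi).
    azimuth = (world_bearing - listener_yaw_rad + math.pi) % (2 * math.pi) - math.pi
    # Simple inverse-distance gain, clamped so it never exceeds 1.
    gain = min_distance / max(distance, min_distance)
    return distance, azimuth, gain
```

For a listener at the origin facing +y and a source at (3, 4), this returns a distance of 5 m, an azimuth of about 0.64 rad to the right, and a gain of 0.2.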

Scalable Spatial Design

Sound sources are distributed as independent objects within the virtual scene, allowing flexible spatial composition. The system scales from small rooms to large environments, supporting multi-zone layouts and complex spatial narratives.
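One way to organize independent sound objects across multiple zones is sketched below in Python. The rectangular `Zone`, the `SoundObject` record, and the rule of activating only the sources in the listener's current zone are hypothetical design choices, not details given in the text; they illustrate how keeping sources as independent objects lets a layout grow zone by zone without changing the rendering code.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """Hypothetical axis-aligned rectangular zone on the floor plane (metres)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        # Inclusive bounds: a point on a shared edge belongs to both zones.
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


@dataclass
class SoundObject:
    """An independent sound source with a fixed position and a zone tag."""
    name: str
    x: float
    y: float
    zone: str


def active_sources(
    zones: list[Zone], sources: list[SoundObject], listener_x: float, listener_y: float
) -> list[SoundObject]:
    """Return the sources belonging to whichever zone(s) contain the listener."""
    current = {z.name for z in zones if z.contains(listener_x, listener_y)}
    return [s for s in sources if s.zone in current]
```

Because the bounds are inclusive, a listener standing on a shared boundary falls into both zones, so sources from both are active there rather than cutting off abruptly at the edge.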

Outcome

The framework was tested across rooms of increasing size, demonstrating significantly improved spatial clarity and immersion in larger environments. Users reported a strong and intuitive connection between physical movement and perceived sound location.