Insight

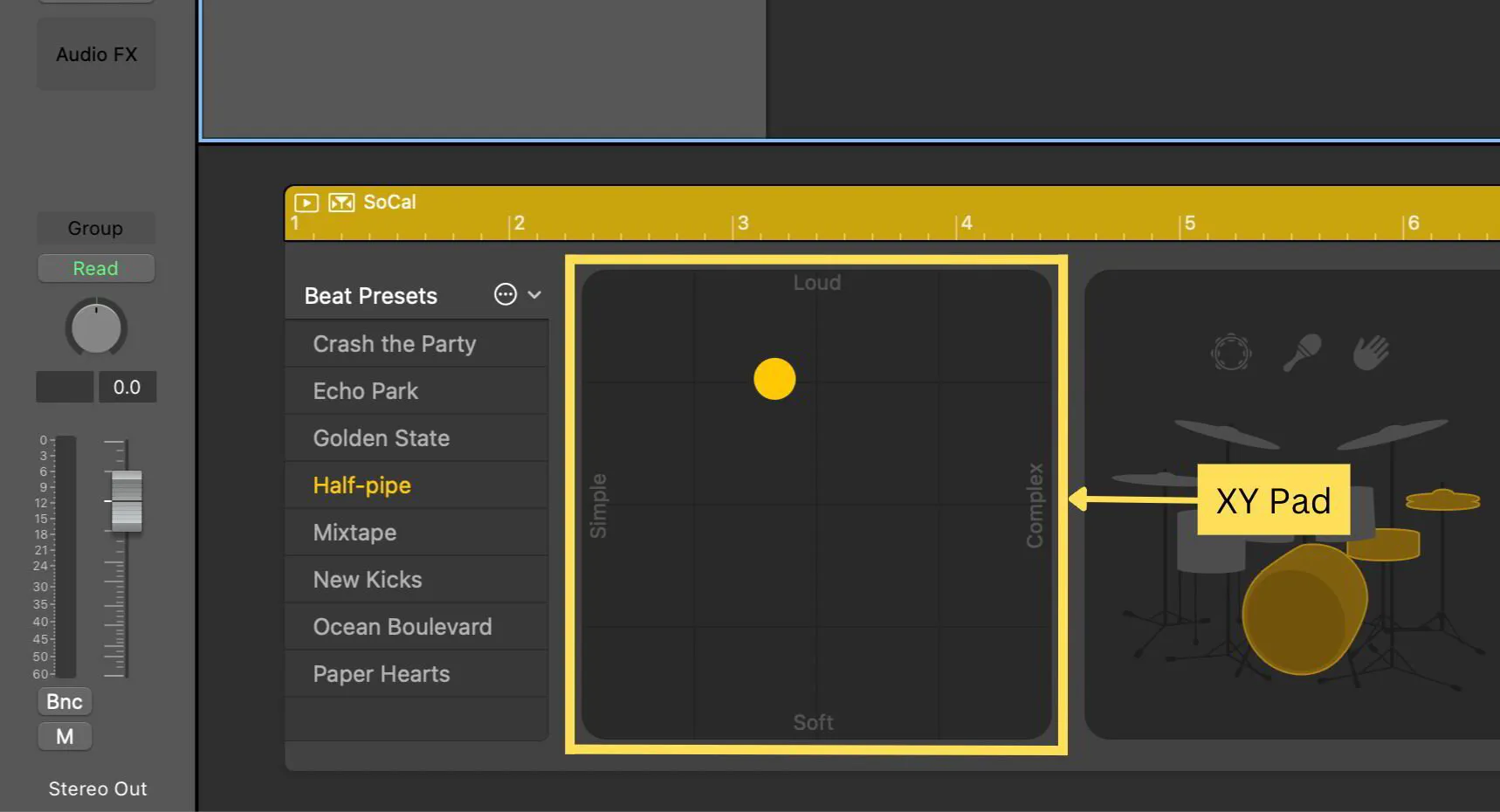

Inspiration from Logic Pro’s XY Pad

The interaction model of SoniBox was inspired by the XY Drum Pad in Logic Pro, where two-dimensional touch input is mapped to multiple sonic parameters simultaneously. Users can explore timbre and rhythm intuitively by moving their hands, without needing to understand the underlying synthesis logic.

This suggested that generative sound systems could benefit from a similar spatial metaphor: rather than exposing parameters directly, mapping sound behavior onto a continuous 2D space lets users “navigate” sound emotionally and perceptually.

Interaction Design

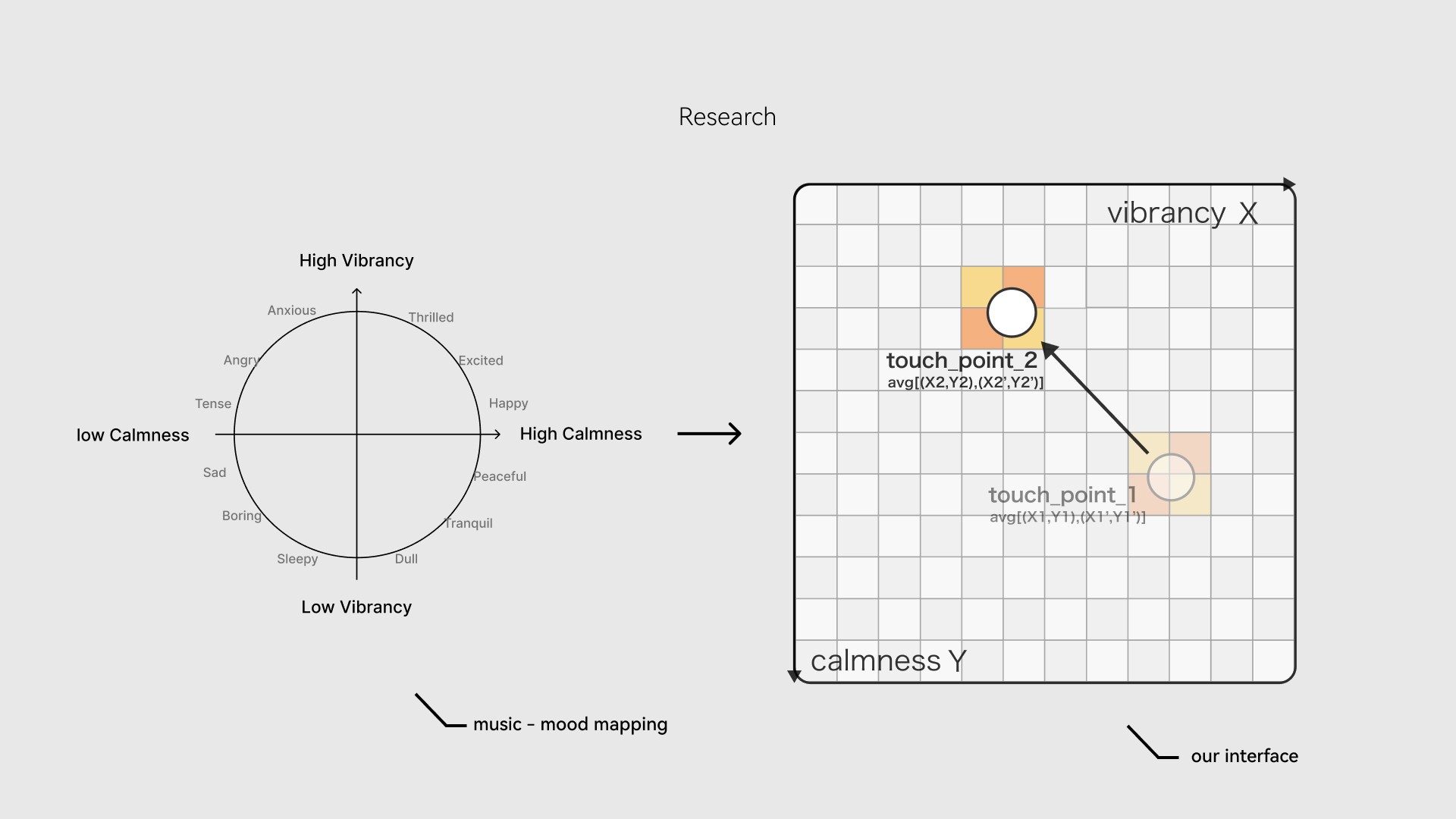

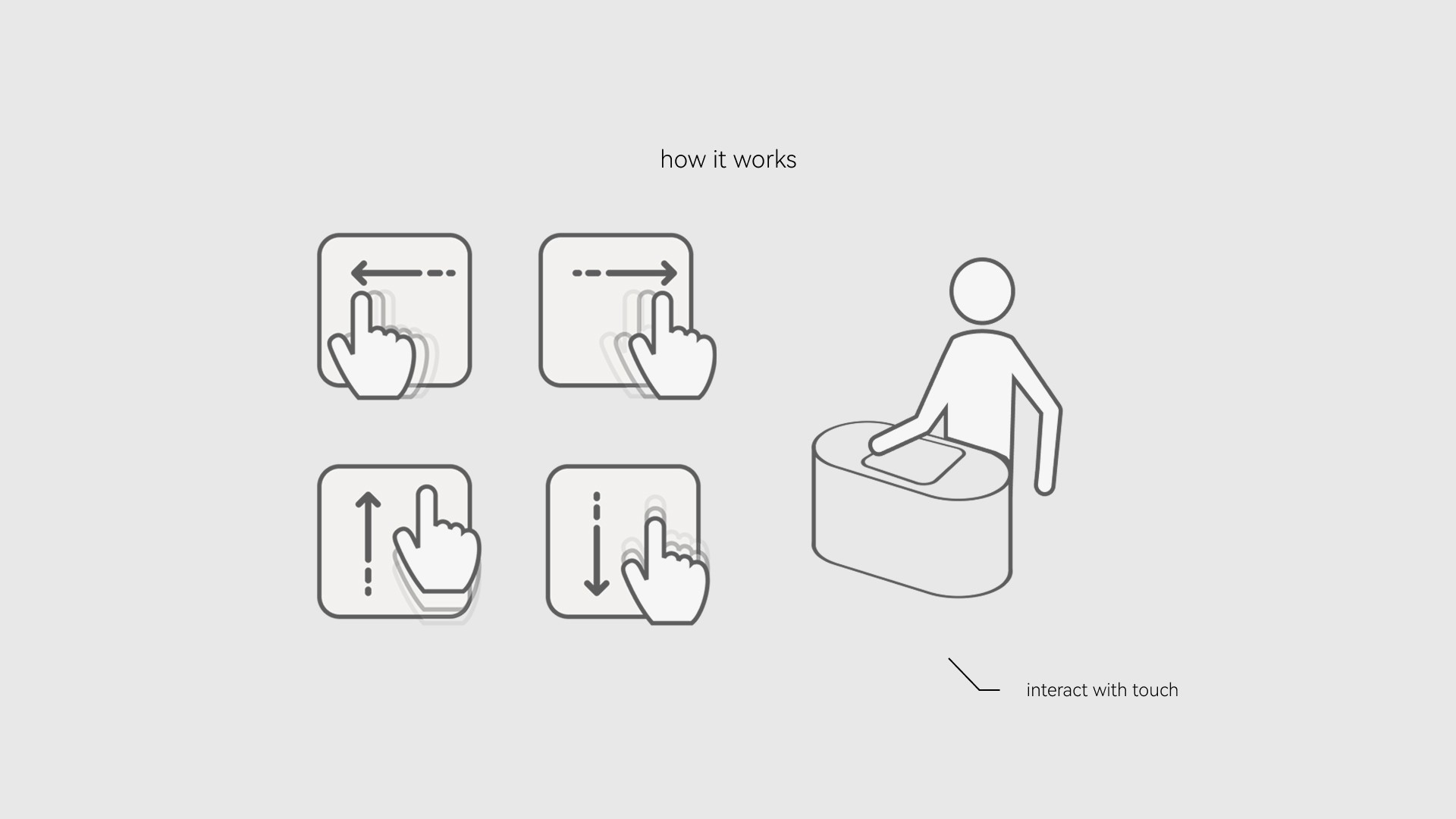

The interaction design of SoniBox went through several iterations to explore how physical touch could meaningfully shape generative sound without becoming either too literal or too abstract. Early prototypes exposed low-level sound parameters directly, but user testing showed that participants struggled to associate technical controls with emotional outcomes. Small changes in input often led to unpredictable or imperceptible sonic differences, breaking the sense of agency.

Through iteration, the interaction was reframed from parameter control to emotional navigation. Instead of adjusting synthesis values explicitly, touch position on the textile surface was mapped to higher-level affective dimensions: calmness and vibrancy. This shift reduced cognitive load and allowed users to explore sound through continuous movement rather than discrete actions.
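The reframing from parameter control to emotional navigation can be illustrated with a minimal sketch. It assumes the touch position arrives normalized to the range 0 to 1, that the horizontal axis drives vibrancy and the vertical axis drives calmness, and that these two values are then interpolated into sound parameters. The axis assignment, the names `density` and `brightness`, and their ranges are hypothetical and are not taken from the SoniBox implementation.

```python
from dataclasses import dataclass


def clamp01(value: float) -> float:
    """Keep a value inside the normalized range [0, 1]."""
    return max(0.0, min(1.0, value))


def lerp(low: float, high: float, t: float) -> float:
    """Linear interpolation between low and high for t in [0, 1]."""
    return low + (high - low) * t


@dataclass(frozen=True)
class Affect:
    calmness: float  # 0 = restless, 1 = calm
    vibrancy: float  # 0 = muted, 1 = vivid


def touch_to_affect(x: float, y: float) -> Affect:
    """Map a normalized touch position to the two affective dimensions.

    Assumption: x drives vibrancy and y drives calmness.
    """
    return Affect(calmness=clamp01(y), vibrancy=clamp01(x))


def affect_to_params(affect: Affect) -> dict:
    """Translate affect into hypothetical low-level sound parameters."""
    return {
        # More vibrancy means more sound events per unit of time.
        "density": lerp(0.1, 1.0, affect.vibrancy),
        # More calmness means a darker, softer spectrum.
        "brightness": lerp(1.0, 0.2, affect.calmness),
    }
```

The user only ever moves within the two-dimensional affect space; the translation into synthesis values stays hidden inside `affect_to_params`, which is the point of the redesign described above.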

System Design

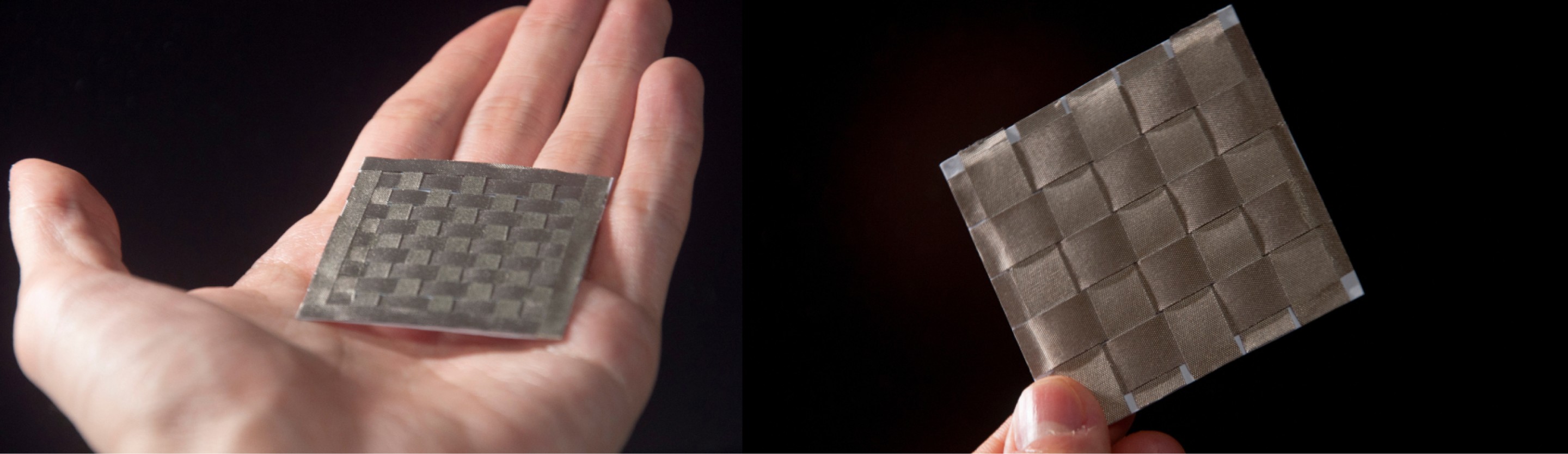

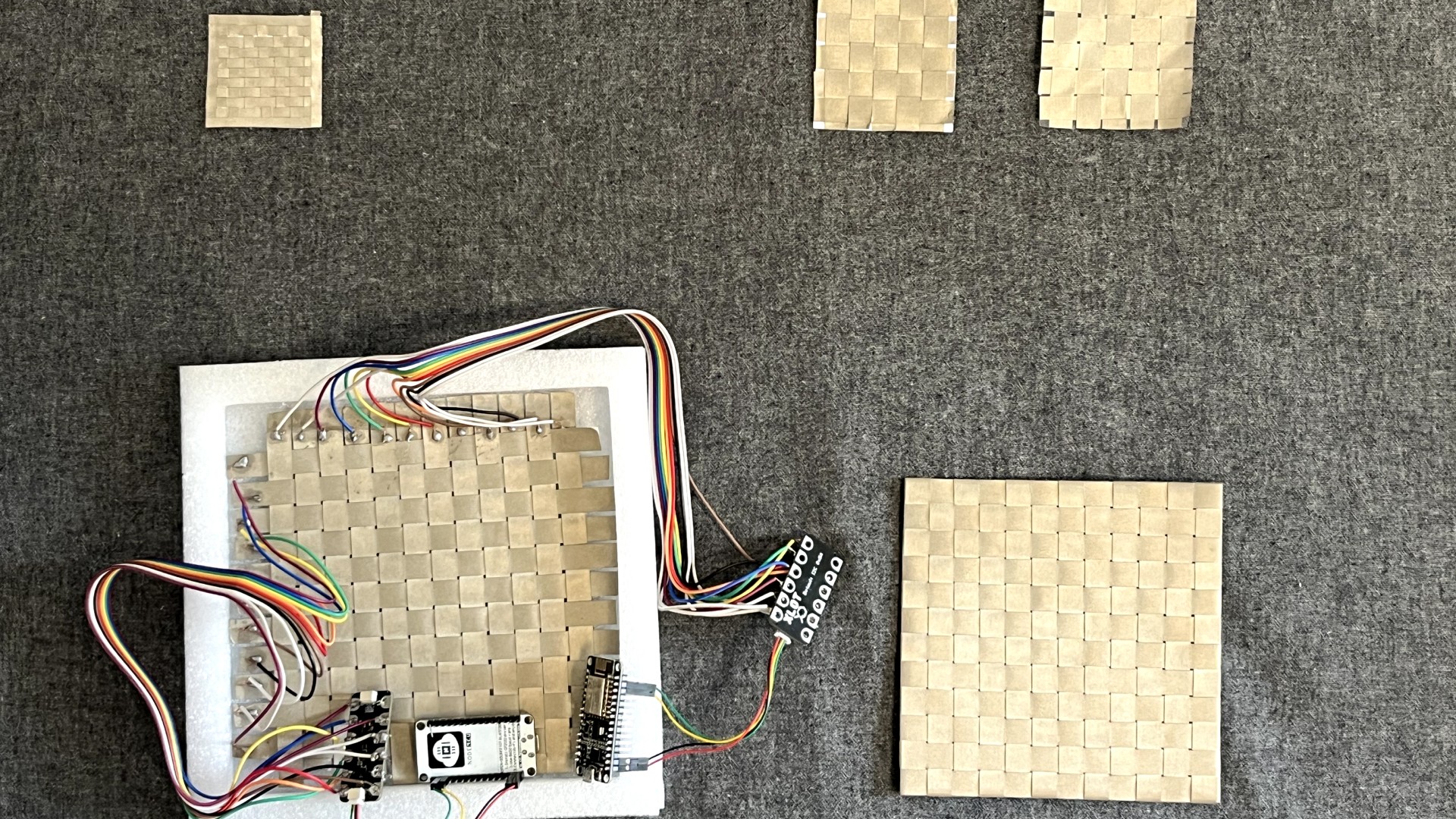

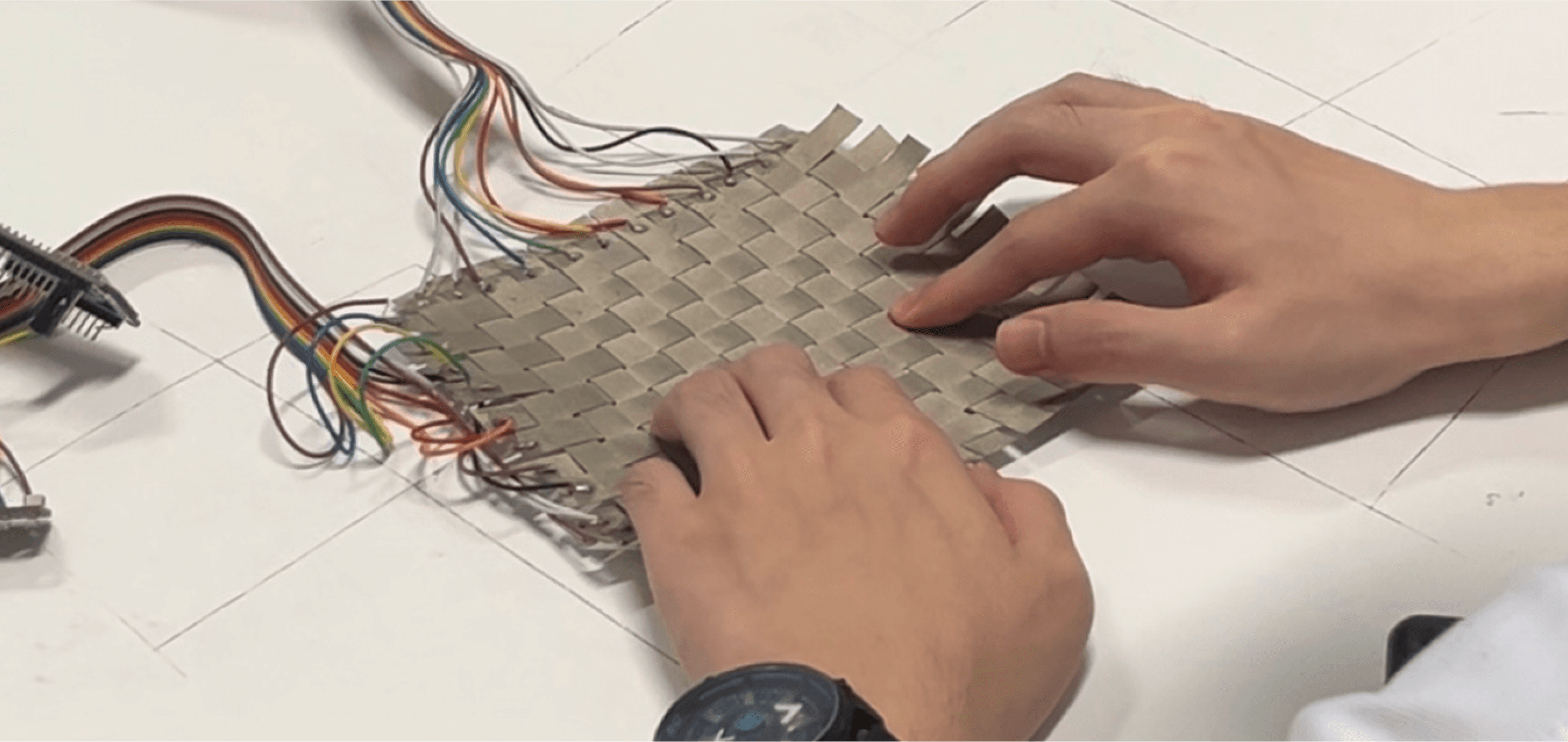

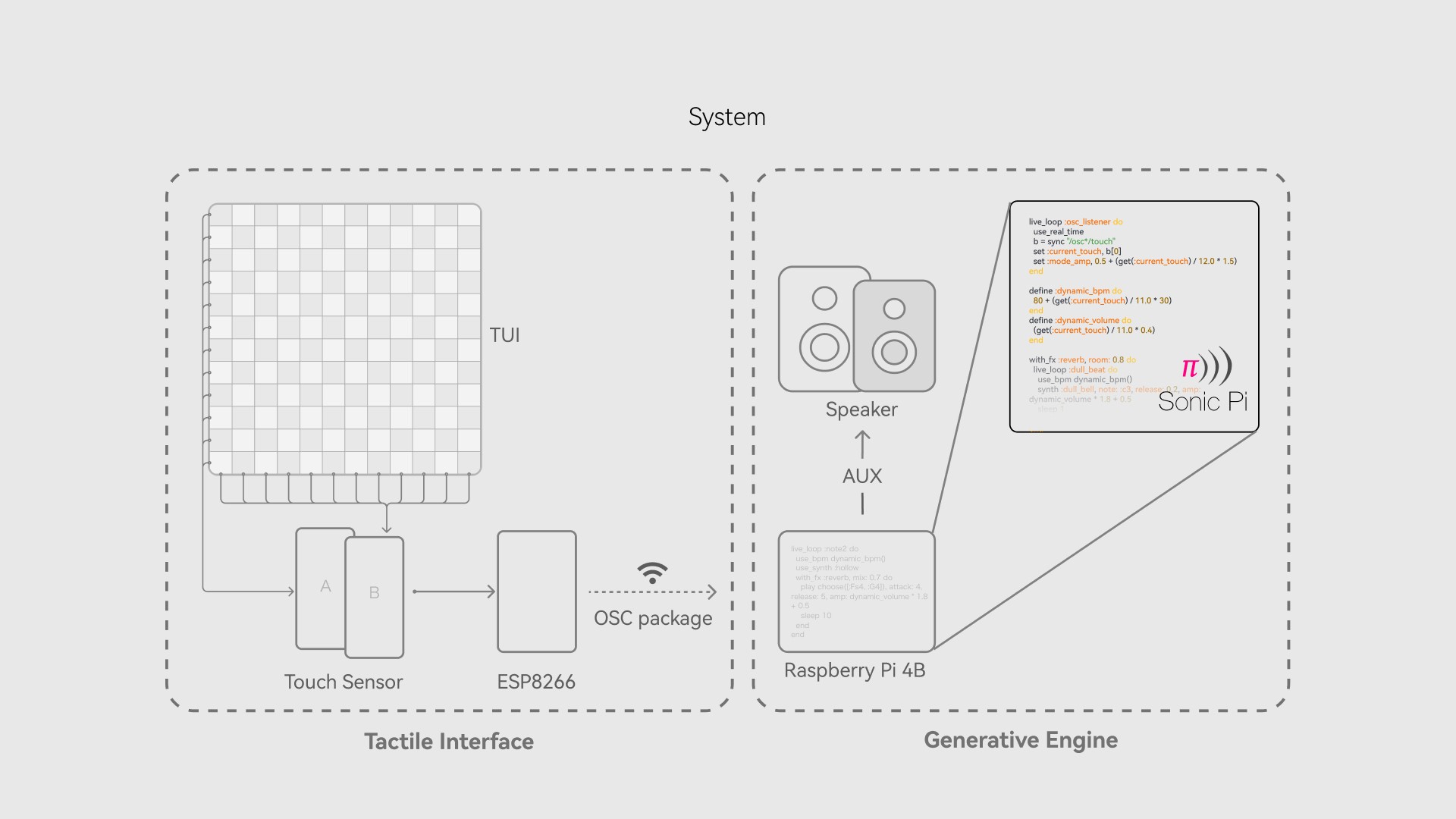

SoniBox is implemented as a distributed embedded system that separates interaction sensing from sound generation while maintaining real-time responsiveness. Touch input is captured through a textile-based x–y grid made of conductive fabric, with ESP microcontrollers continuously sampling touch location and pressure-related signals. This data is streamed to a Raspberry Pi via Open Sound Control (OSC), enabling low-latency, continuous communication between hardware and software layers.
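To make the sensing-to-sound link more concrete, the sketch below shows how a single touch sample could be packed into an OSC message and sent over UDP. The byte layout follows the OSC 1.0 encoding (null-terminated strings padded to four bytes, big-endian 32-bit floats). The address `/sonibox/touch`, the three-float payload, and the use of UDP are assumptions for illustration; the firmware running on the microcontrollers is not described here and would likely be written in a different language.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def encode_osc(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", value) for value in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_touch(sock: socket.socket, host: str, port: int,
               x: float, y: float, pressure: float) -> None:
    """Send one touch sample; the address is a hypothetical name."""
    message = encode_osc("/sonibox/touch", x, y, pressure)
    sock.sendto(message, (host, port))
```

A sender would create one socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_touch` once per sample. Because each message is small and self-contained, a dropped packet only loses one sample, which suits a continuous stream of positions.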

On the audio side, Sonic Pi is used as the generative sound engine, chosen for its ability to support live-coded, algorithmic sound synthesis with precise timing control. Incoming interaction data does not trigger discrete events; instead, it modulates parameters of the ongoing generative processes, such as density, texture, and spectral balance. This approach avoids repetitive playback and allows the soundscape to evolve smoothly over time.
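One common way to turn incoming values into gradual modulation rather than abrupt jumps is exponential smoothing, sketched below. This is an assumed technique, not a description of how SoniBox does it, and in practice the equivalent logic would live in Sonic Pi's own Ruby-based language. The class name `SmoothedParam` and the `smoothing` coefficient are hypothetical.

```python
class SmoothedParam:
    """A parameter that glides toward its target instead of jumping."""

    def __init__(self, initial: float, smoothing: float = 0.9) -> None:
        if not 0.0 <= smoothing < 1.0:
            raise ValueError("smoothing must be in [0, 1)")
        self.value = initial
        self.target = initial
        self.smoothing = smoothing  # closer to 1 means slower glide

    def set_target(self, target: float) -> None:
        """Called whenever a new interaction value arrives."""
        self.target = target

    def step(self) -> float:
        """Advance one control tick and return the current value."""
        self.value = (self.smoothing * self.value
                      + (1.0 - self.smoothing) * self.target)
        return self.value
```

Each incoming message would only call `set_target`, while the generative loop reads `step()` on every tick. The sound process therefore never restarts; it keeps running while parameters such as density drift toward the latest touch position.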